Building an Immaculate Grid Puzzle Solver

There's a special kind of embarrassment that comes from working in baseball research but being terrible at baseball trivia. I grew up loving the game of baseball, not its history. One game that constantly reminds me just how little baseball history I actually know is Immaculate Grid, a daily baseball trivia game. It swept through our office intern group, becoming a hyper-competitive ritual each morning. I hated it.

"List a player who won MVP and played for the Reds." These decepively difficult trivia questions would leave my mind blank. I would often resort to looking up answers just to avoid posting a humiliating score. Even with some help, I usually scored around 500 out of a possible 900. For context: in Immaculate Grid, a lower score is better, and the office average was around 100. Brutal.

After enough mornings spent flailing, I figured if I couldn't beat the game with memory, maybe I could with code. So I started mapping this trivia challenge into a software engineering problem, the kind I actually know how to solve.

The Problem

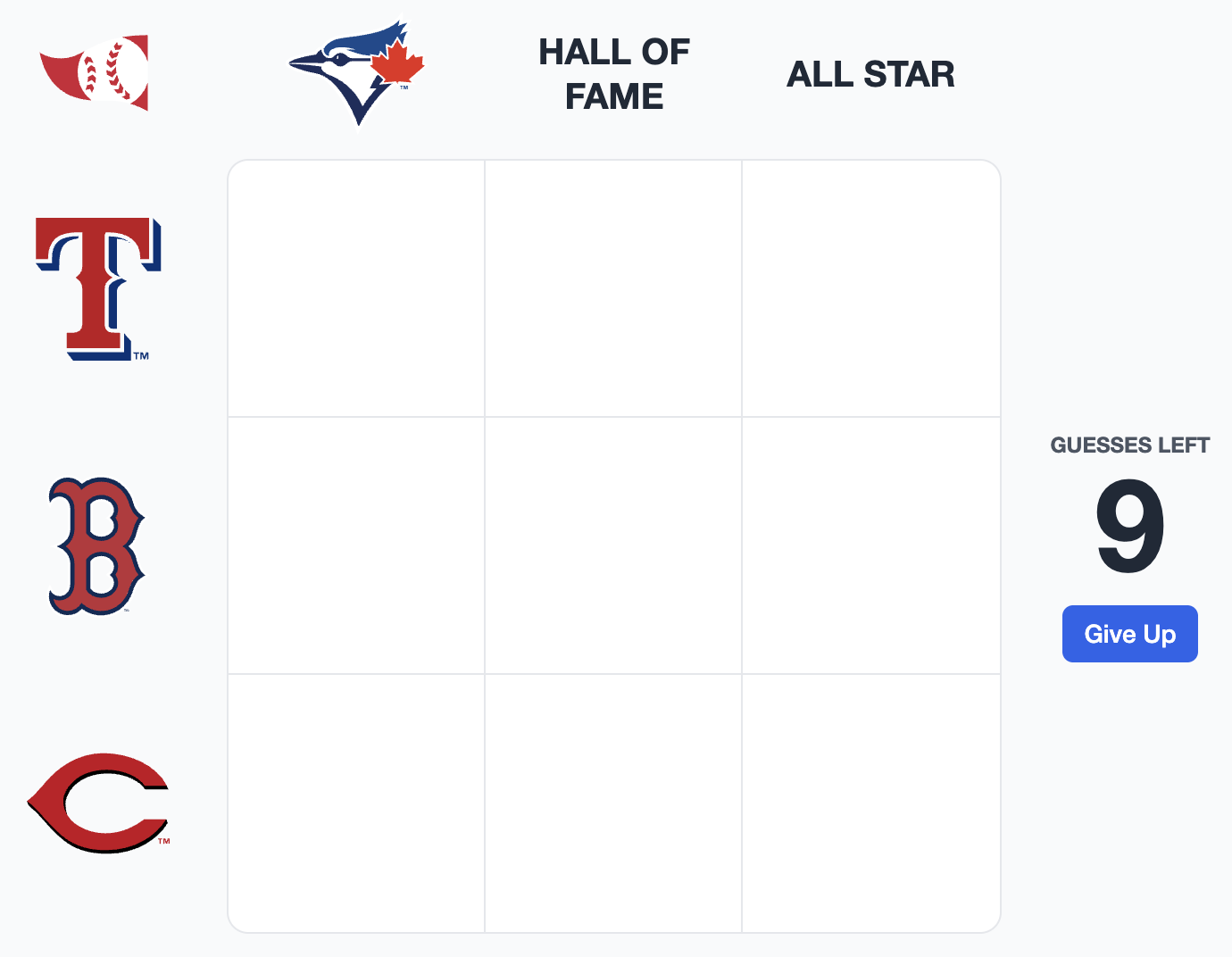

Immaculate Grid is beautifully simple in concept. Each day presents a 3×3 grid where the intersection of any row and column creates a unique question. For example, the first Immaculate Grid puzzle ever created (hint: click the image) is shown below. To complete the middle cell, you would need to provide a player who played at least one game for the Boston Red Sox and is also in the Hall of Fame.

Accuracy is only part of the score calculation. Correct answers contribute to a "rarity" score, calculated as the percentage of players who chose the same answer as you. An incorrect answer automatically results in a 100% rarity score. Your total score is the sum of all your individual percentages, with a minimum score of 0 and a maximum score of 900.

What frustrated me wasn't just my poor performance, but also the inefficiency of the process required to find valid answers. I'd end up with a browser full of tabs, cross-referencing Baseball Reference pages, team rosters, and award histories. For someone who had been working in baseball operations and data engineering, this felt like exactly the kind of problem that should be solvable with code.

The Foundation

The inspiration struck me during a flashback to an introductory data analysis course where I had worked with Sean Lahman's baseball database. At the time, I had been impressed by one seemingly minor detail: the database included Baseball Reference player IDs. These IDs create direct links to players in the most comprehensive baseball statistics site on the internet: baseball-reference.com.

I didn't know what those IDs would be useful for back then, but they became the secret sauce that made this entire project possible. Generating unique player identifiers is a non-trivial task when you're dealing with names like "Bob Miller" (there have been multiple major leaguers with that exact name). Having this foundation already built meant I could focus on the fun parts, since manual player mapping is not at all fun.

The database's structure was perfect for this use case. It contained decades of player statistics, team affiliations, awards, and biographical information. Essentially, it had everything needed to answer Immaculate Grid questions. Better yet, it was both free and open source.

Initial Rails Backend Work

While I was familiar with Rails from work, I had never built something from scratch for myself. I started with the standard Rails 8.0 generator, embracing all the modern conveniences: built in authentication, Solid Cache for performance, and SQLite for simplicity.

I had already written some basic SQL queries to answer common Immaculate Grid questions before my development of this new Rails backend began. During the start of my internship in baseball research, I was granted read access to the entire research database. One schema included the 2023 version of the Lahman database, which I used to practice new SQL skills like CTEs and window functions. One of the first queries I created identified players who played for two teams. For example, a question like "Find players who played for both the Yankees and Red Sox" could be answered with a query like the one below.

-- Simplified example of 'New York Yankees + Boston Red Sox'

WITH target_teams AS (

SELECT 'NYA' AS team_id

UNION ALL

SELECT 'BOS' AS team_id

)

SELECT a.player_id

FROM Appearances a

JOIN target_teams t ON a.team_id = t.team_id

GROUP BY a.player_id

HAVING COUNT(DISTINCT a.team_id) = 2;This query template could solve approximately 50% of all Immaculate Grid questions. Not bad for a random query I had written almost a year prior. I created a simple API endpoint to take in the cell conditions (for example, the two teams in question) and return a list of Baseball Reference player IDs. It wasn't much, but it was something to build upon.

Deploying the Rails Backend

I had worked a small amount with AWS through my job (including earning one AWS certification), so deploying this project using Kamal to a free tier EC2 instance was relatively painless due to the excellent Rails Getting Started Guides. Before long, my simple endpoint was on the internet.

However, not everything about the deployment process came as easily. One area I struggled with later in the project was the existence of rake tasks from within my Rails app. Rake tasks are ad hoc scripts that can be run from the command line and are often linked to database processes like setup and migrations. Because of this, it made sense to use rake tasks to transform the Lahman database tables (now called "Rails data models") into more efficient and useful objects. This includes steps like adding indexes and columns with heavy preprocessing steps, such as reading and transforming CSV data.

While it's trivial to add CSV files to a project locally, understanding where to move the same files in the production server is not so straightforward. Not only are you running on a remote server, but the app itself is running within a Docker container. While I had options to utilize Docker volumes and Docker specific scp tools, I instead just bundled all required CSV files into the Docker image. This was the most straightforward approach, and given that the entire size of all required CSV files is less than 30MB, I found this to work for me. As the CSV file list expands or the size of the image becomes unwieldy, we may need to reevaluate this decision.

Designing the API

As I expanded beyond team-to-team queries, I realized I was building something more ambitious than a simple lookup tool. Immaculate Grid uses five main condition types:

- Team (Yankees, Red Sox, Cubs)

- Stat (300+ HR, 3000+ H, 20+ wins)

- Position (Pitcher, Catcher, First Base)

- Award (MVP, Cy Young, Gold Glove)

- Player (Hall of Fame, Born Outside US 50 States and DC)

Since any condition can cross with any other condition (including itself), this creates 15 unique question patterns. I faced a choice: build one monolithic service to handle all combinations, or create 15 specialized services to solve their own unique question conditions.

I chose the latter. Each service became a Plain Old Ruby Object (PORO) in app/services/questions/, handling the specific logic for its combination. TeamStatService handles "New York Yankees + 300 HR". AwardAwardService handles "MVP + Gold Glove". And so on.

This pattern provided clean separation of concerns and made debugging infinitely easier. When a specific question type failed, I knew exactly which service to examine. Given that the number of condition combinations is finite at 15 (knock on wood), this feels like a very defensible position given the benefits.

Pattern Matching and Query Building

Each service follows a consistent pattern:

- Match conditions - Parse the question to identify condition types

- Build query - Construct SQL with Common Table Expressions (CTEs) to identify player matches

- Execute and format - Return formatted player data, including Baseball Reference links and biographical information

The matching process involved one of two approaches. The simple approach is to use a lookup against a preprocessed dictonary of condition values. For example, when we are trying to match to a team like the "New York Yankees", we look up the team name in the keys of a lookup dictionary, with the value being their corresponding Baseball Reference IDs. This is fast and efficient, but not always possible. The other approach is to use regex pattern matching to identify condition types. The "Stat" condition type is a good example of this. It doesn't make sense to list each possible stat condition in a dictionary (like "300+ HR Career", "275+ HR Career", etc.). Instead, we can use a regex pattern to identify the stat type and value. For example, the regex /(\d+)\+\s([A-Z]+)\s(Career|Season)/ will match "300+ HR Career" and return the value 300, the stat type HR, and the condition type Career.

Once the inputs have been matched and the relevant data has been extracted, the next step is to build the SQL query. The most common query type are two independent queries that find their own results and then intersect those results to find the players that match both conditions. For example, to find players who played first base and also won a silver slugger award, we would write two queries, one to find all first basemen, and another to find all silver slugger winners. If a player was in both sets, they would be returned. This happens in nearly every service type that does not include a "Team" condition. The makers of Immaculate Grid gave additional conditions on this question type. If we had the question "Boston Red Sox + 20+ HR Season", not only would you have to find players that match each condition, but the stat had to have been achieved while rostered on that team. This slightly complicates the queries, but in general the complexity is handled locally within each service.

Finally, we have query execution and formatting. Query execution is straightforward using the Rails built in SQLite database. The connections and configurations are pre-built for you, resulting in a smooth and painless querying process. Coming from a background in data engineering, this is not something I have taken for granted. The formatting of this data happens mostly in the sql queries themselves. We add attributes to each returned player ID, like their names and ages and length of career. A common ending to many of the queries in the question services look like the example below.

WITH matched_conditions AS (

-- Logic to find matching players ...

)

SELECT

CONCAT(p.name_first, ' ', p.name_last) AS name,

p.primary_position AS position,

SUBSTR(p.debut, 1, 4) || '-' || SUBSTR(p.final_game, 1, 4) AS pro_career,

2025 - p.birth_year AS age,

ROW_NUMBER() as lps,

p.bbref_id

FROM matched_conditions mc

LEFT JOIN people p ON p.player_id = mc.player_id

ORDER BY p.bwar_career IS NULL DESC, p.bwar_career DESC, age DESC;

Chrome Extension Development

With a working Rails API, I needed a way for users to actually benefit from this system. Developing a Chrome extension was appealing since it could add DOM elements directly to the Immaculate Grid website HTML without requiring users to leave the page. It also had the added benefit of being free and simple for potential users to access.

Our extension blends right into the site: on page load, its content scripts scan the HTML to pick out all nine question IDs, bundle them into a GET request, and fetch the answers from our API. When you click a grid cell, the script spots the site's search modal opening, injects a compact UI panel under the search bar, and populates it with a neatly formatted table populated from the response of the previously sent GET request. All components, from the popup to the service worker and content scripts, stay in sync using the Chrome extension API's internal messaging layer, making the whole experience smooth and responsive.

The extension evolved from a simple result viewer to a full fledged injection tool with features like auto hiding solutions, light/dark mode support, and clickable links that autofill the search bar.

The Data Completeness Problem

After developing all 15 services using the Lahman database, I hit a significant roadblock: missing data. The most glaring omission was bWAR (Baseball Reference Wins Above Replacement), despite the database including Baseball Reference player IDs.

This sent me down a web scraping rabbit hole. I found two Baseball Reference pages that provided partial solutions:

Rather than try to match seasonal WAR values to existing batting/pitching tables, I opted for Boolean attributes on the People model. Questions like "6+ bWAR season" or "40+ career bWAR" became simple lookups against flags preprocessed from rake tasks. Since neither source is complete on the entire list of all MLB players, we set this Boolean flag to a default of False. This means that not all potential solutions are present for these condition types, but its enough to get started with.

This pattern extended to other missing conditions, albeit with complete data sets: players in the Hall of Fame, World Series champions, players with careers on only one team, pitchers with no hitters. Rake tasks handled all the preprocessing, transforming complex historical lookups into fast Boolean checks on the People data model.

The Debugging Marathon: From 88% to 98%

Once I had scraped the content from all historical Immaculate Grid puzzles, I could run my API service through a comprehensive testing suite. The initial accuracy was encouraging at 88% of all historical questions asked. A question passes if the endpoint returns a non empty response.

The debugging process took three days. I wrote exhaustive tests for all 15 service combinations, tracking exactly which patterns failed and why. The test suite became a diagnostic tool:

TEST_CASES = [

{

service_class: Questions::AwardAwardService,

question: "MVP + Gold Glove",

description: "Award + Award",

expected_pattern: "award_award"

},

# ... 14 more test cases covering every combination

]Each test verified both pattern matching and result quality. Failed tests revealed edge cases in condition parsing, missing data relationships, and query logic errors. Through systematic debugging, I improved accuracy from 88% to 98%.

The Final 2%: When Perfect Isn't Possible

The remaining 2% of failures taught me about the messiness of real-world data. Some issues stem from the complexity of baseball itself:

- Franchise vs. Team ID problems: Questions about the Miami Marlins or Washington Nationals sometimes fail due to how franchise relocations are handled in the database.

- Data availability limits: Conditions like "Played in Negro Leagues" or "First round draft pick" simply aren't in the Lahman database and have no publicly available records with Baseball Reference "playerID" column values.

Rather than chase perfect accuracy, I accepted that 98% represents the practical limit given the available data sources. The remaining failures are well-documented and reflect the inherent ambiguity in baseball history. Future improvements will focus on expanding data sources to capture these edge cases, though some conditions may remain unmappable due to data availability constraints.

Performance and Caching

The final production system includes comprehensive caching for all 832 historical Immaculate Grid puzzles. With a maximum of 100 players per question and average responses of 450 to 600 players per puzzle, storing these as JSON files is manageable even on a small EC2 instance.

The cache expires after 30 days, with Kamal mechanisms to reset it easily during deployments. This approach means most queries return instantly, making the Chrome extension feel responsive even when processing complex multi-table joins.

Lessons Learned

This project taught me a tremendous amount about full stack development. Key takeaways:

Start Simple, Then Expand: Beginning with team to team queries gave me a working foundation to build upon. Perfect is the enemy of good, especially in personal projects.

Architecture Matters: The 15 service approach felt over engineered at first, but it made debugging and feature addition much easier. When you're building alone, code organization is your future self's best friend.

Data Quality Is Hard: The jump from 88% to 98% accuracy required more work than getting to 88% in the first place. Debugging is key.

AI Tools Shine in Iteration: Chrome extension development with AI assistance was remarkably effective. The ability to quickly prototype, test, and refine meant I could focus on the logic rather than getting bogged down in API documentation.

Personal Projects Have Different Standards: Unlike work projects, I could make opinionated choices about scope, technology, and features. This freedom accelerated development and made the work more enjoyable.

The Solution

This system now resolves 98 percent of Immaculate Grid puzzles with near instant responses, thanks to comprehensive caching, clear service separation, and a user interface that blends seamlessly into the immaculate grid web page. Future enhancements might include collecting the remaining 2% of missing data, building an archive of completed puzzles for rarity score analysis or offering personalized sorting and filtering options. For now, the current solution delivers on my core objectives.

I now possess the ultimate Swiss Army chainsaw for conquering the daily immaculate grid puzzle. Before gaining instant access to this table of solutions, I often struggled to achieve 7 out of 9 correct guesses. Now, my concern is achieving a rarity score of less than 7. It's available within a two click install on any computer running Chrome. Like a good Utility Man, it's always there when you need it. Install from Chrome here.